Founded in March 2018 with headquarters in Cotonou, Benin, Atlantic Artificial Intelligence Laboratories (Atlantic AI Labs™) is fostering research, education, and implementation of AI and related technologies in Africa (see press release). Unraveling the secrets of human intelligence is one of the grand challenges of science in the 21st century. It will require an interdisciplinary approach and may hold the key to building intelligent machines that can interact safely and efficiently with humans to help solve a wide range of complex problems.

We are interested in unleashing the potential of AI in Africa through self-reliance. At present, Atlantic AI Labs™ comprises three labs: AI for Agriculture (A4A), AI for Biomedicine (A4B), and AI for Control (A4C). This is part of our "Africa 2060" initiative to transform Africa from an exporter of raw materials into a unified, inclusive, and sustainable center of high-tech research, development, and production by the year 2060. We see AI as the catalyst that will let Africa leapfrog into the Fourth Industrial Revolution.

We are Africans solving African problems with AI. Our guiding principles are Innovation, Collaboration, and Excellence.

In addition to building a team of talented AI researchers and engineers, Atlantic AI Labs™ will also promote early mathematics and science education and offer AI Research Fellowship and Residency Programs in collaboration with African universities.

What we do.

Education

State-of-the-art AI Methods

Aerospace

Analysis and Modeling

Software Development

Enterprise AI

Biomedicine

Precision Agriculture

Environmental Protection

A passionate team of creative & innovative minds.

We are a multidisciplinary team passionate about researching and working toward the long-term goal of Artificial General Intelligence (AGI).

Our goal is to use AI to improve people's lives and health. Our current focus is on AI, Cognitive Robotics, and Autonomy with applications in aerospace, biomedicine, and agriculture.

Slowly but surely, the chameleon reaches the top of the bombax tree.

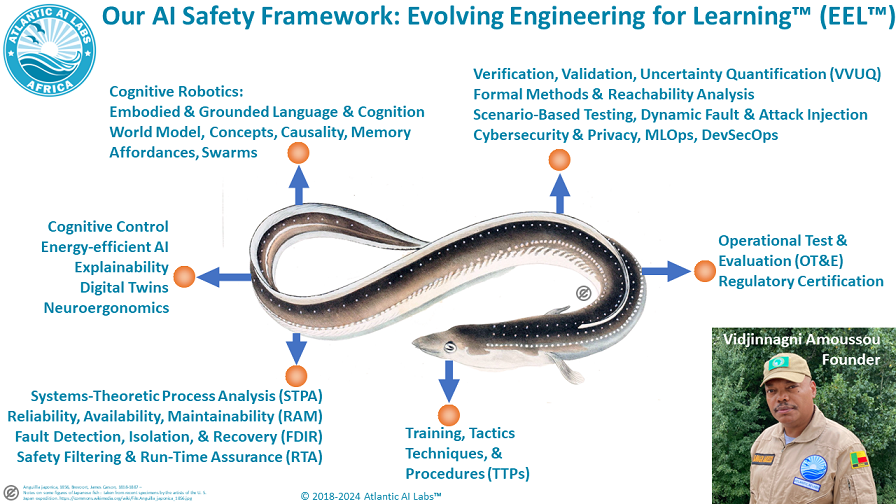

Evolving Engineering for Learning (EEL) is Atlantic AI Labs' framework toward AI trustworthiness and safety. EEL is inspired by the aviation concept of "airworthiness," which has made air travel by far the safest mode of transportation. EEL is just good old engineering, evolving to support learning-enabled, autonomous, adaptive, high-dimensional, highly non-linear, partially observable, and non-deterministic behavior under uncertainty.

Learn more about EEL in our blog post "Evolving Engineering for Learning (EEL) toward Safe AI."

Experience and wisdom.

Founder & Director

Chief Operating Officer

Our AI Implementation Methodology

The Pendjari Park in Benin is the last refuge for the region's largest remaining population of elephant and the critically endangered West African lion.

- African Parks

Get in touch with us for business inquiries or to submit your resume!

By email: vidjinnagni.amoussou@atlanticailabs.com; on Twitter: @atlanticailabs